How to make sense of web speed metrics, accurately measure your site’s performance and the impact it has on your bottom line.

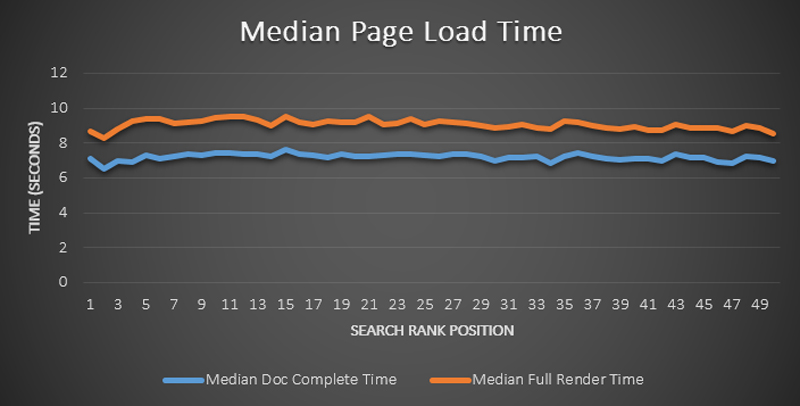

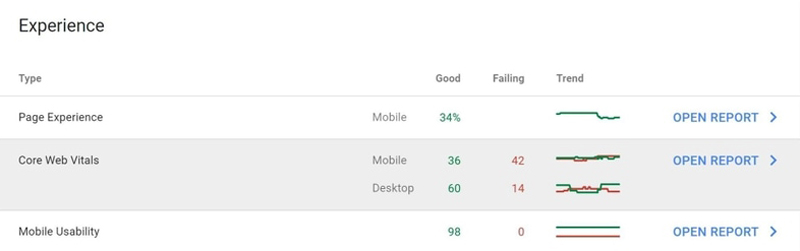

If you own or manage a website, you’d have to be living blissfully under a pretty large rock to miss all the buzz about the rising importance of website speed. The announced Google Search algorithm update has the whole industry in a reluctant frenzy, with developers, agencies, and SEOs scrambling to figure out just what the new requirements will be, how their websites may be affected, and what they can do to prepare for the imminent change.

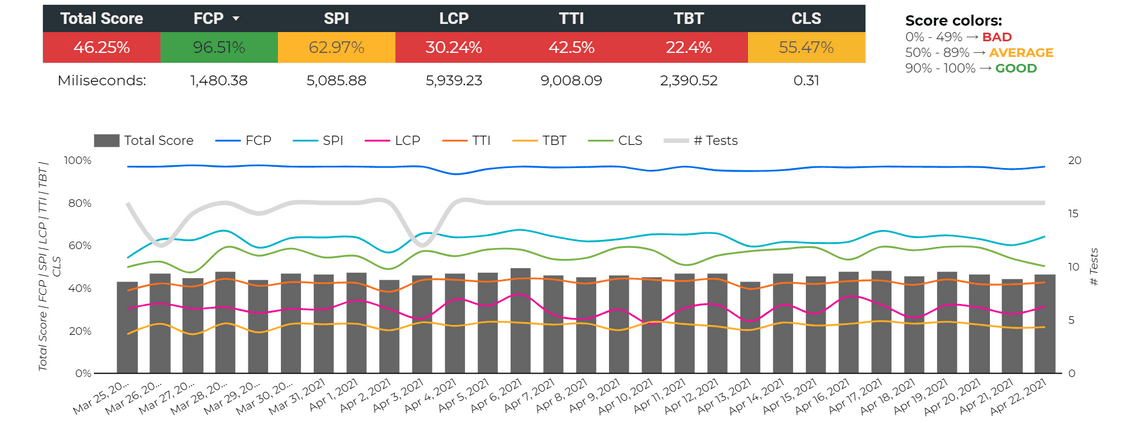

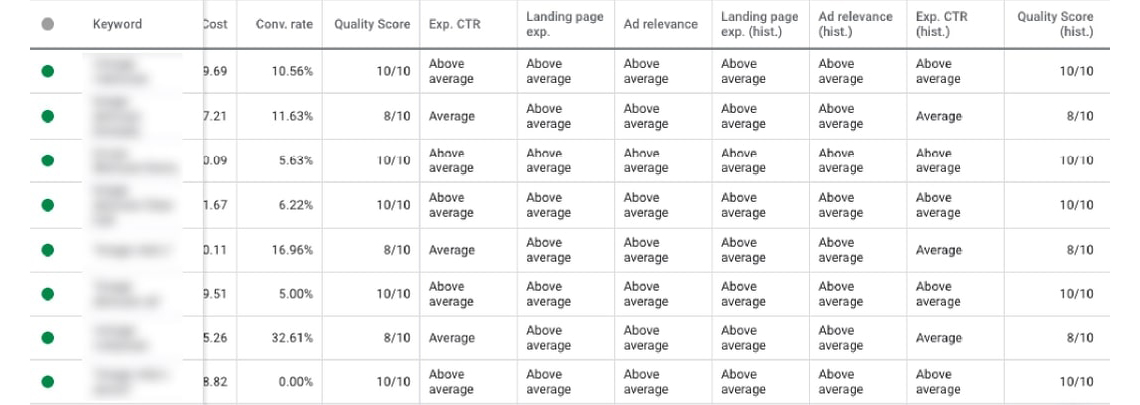

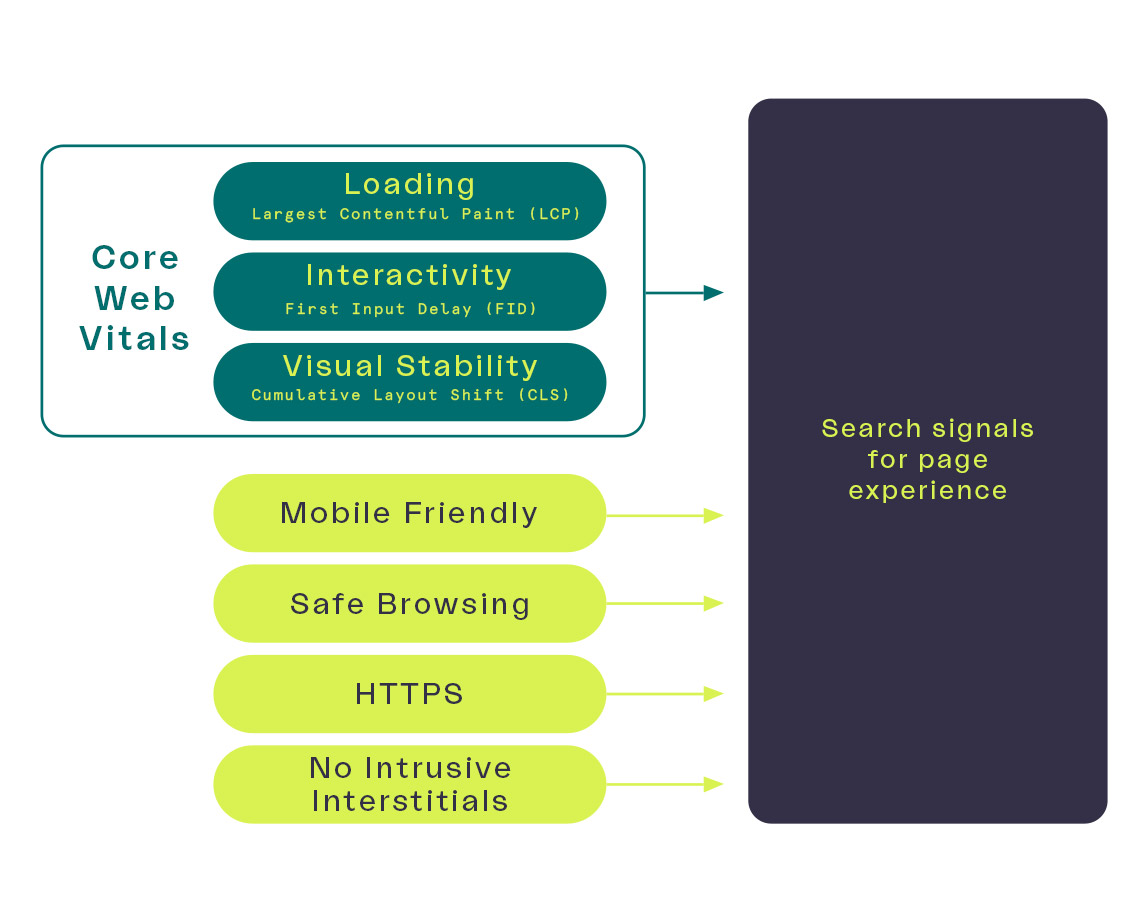

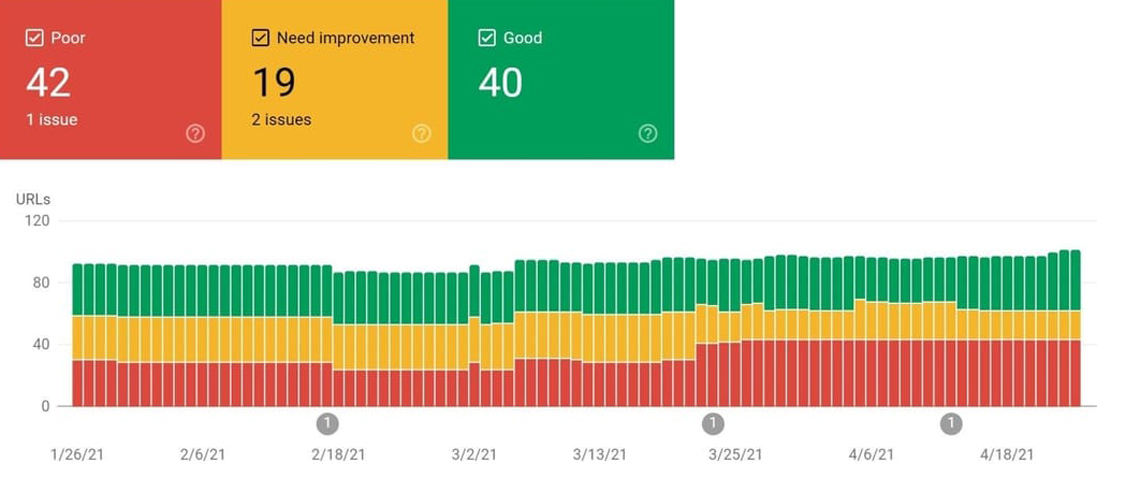

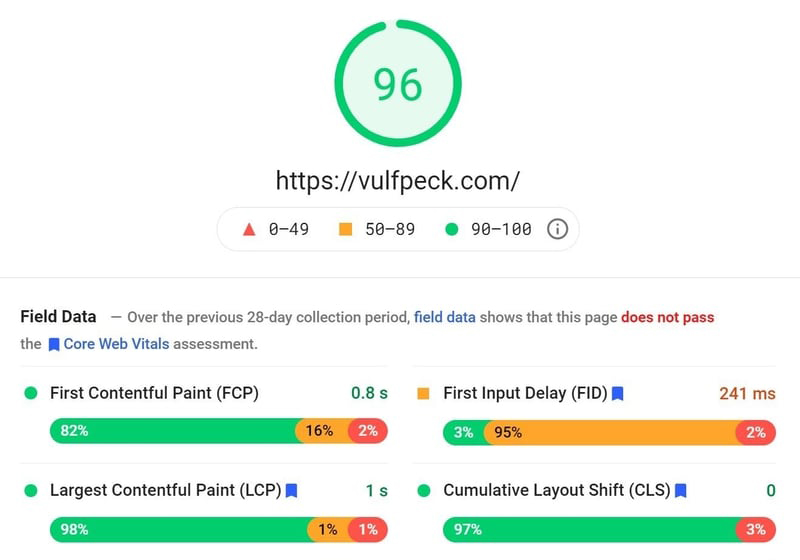

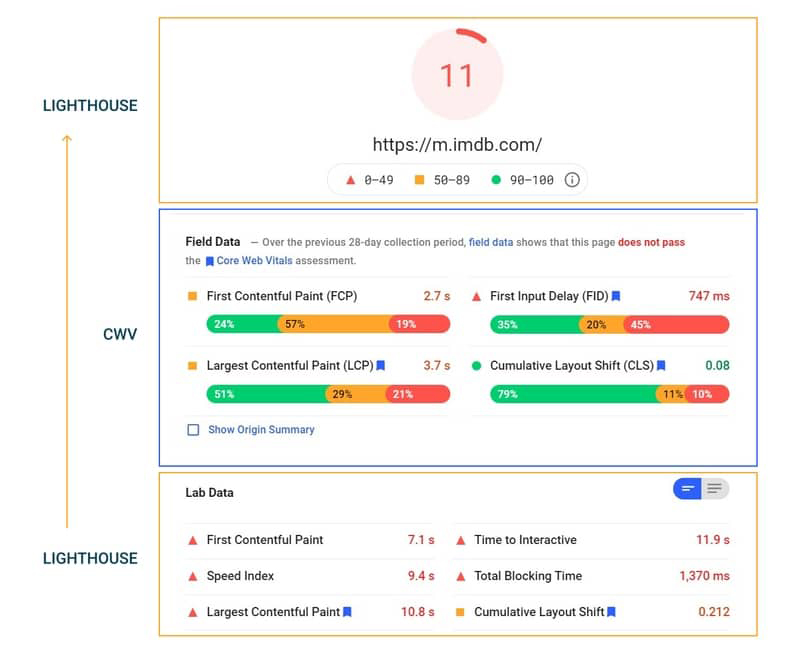

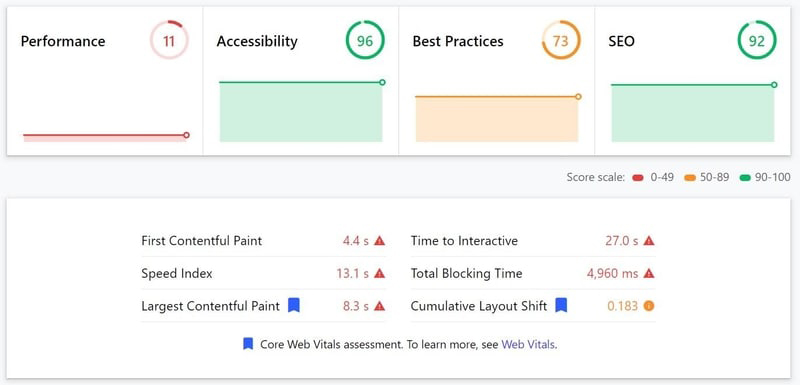

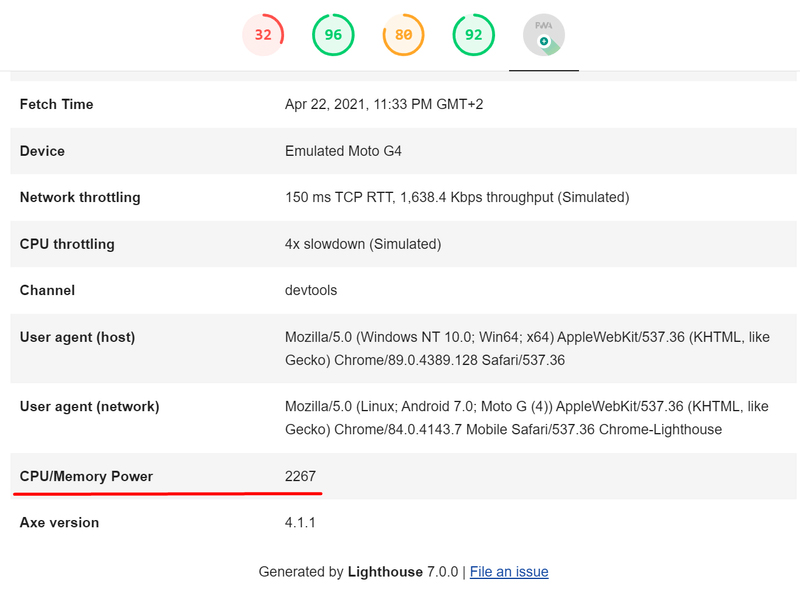

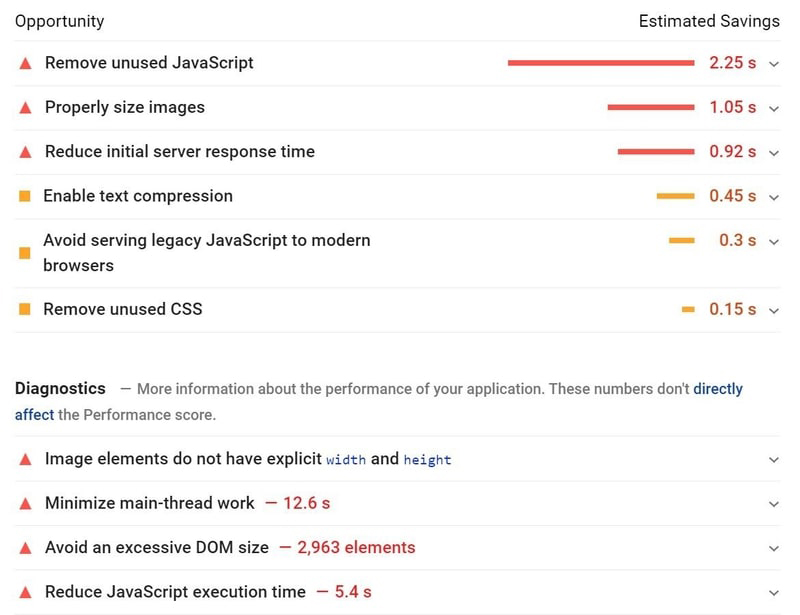

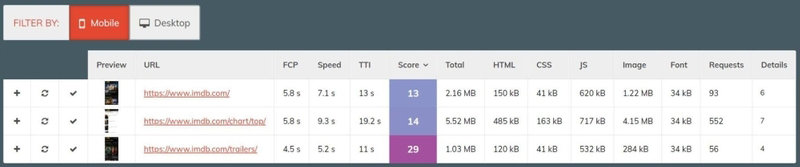

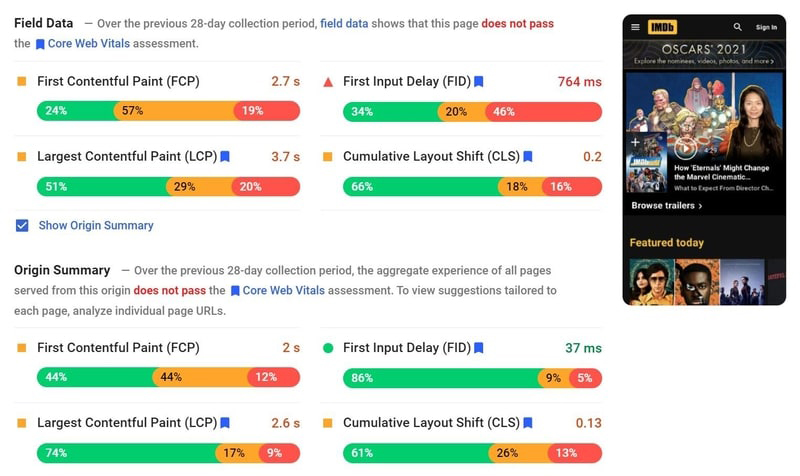

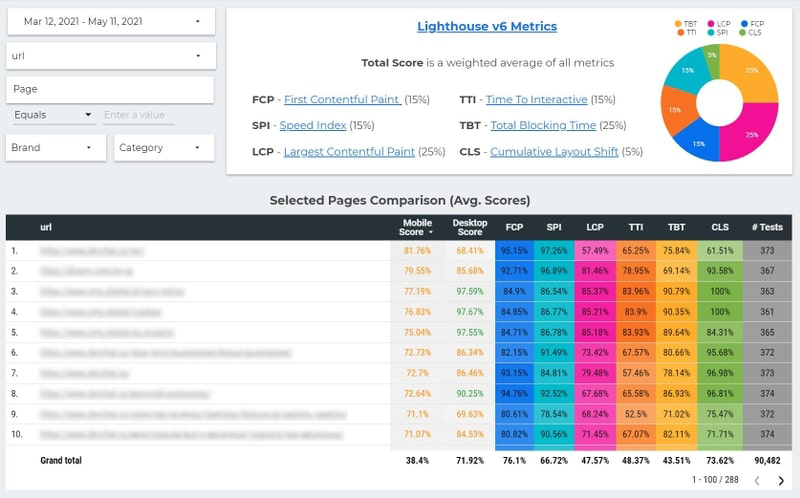

This article will cover the latest news about the Google “Page Experience” search algorithm update (with Core Web Vitals), advice on using tools like Lighthouse and PageSpeed Insights, and a showcase of our approach to reliable and insightful website performance measurement.

We’ve got a lot of ground to cover, so grab yourself a hot beverage and a box of pretzels, and turn off notifications for about 40 minutes of casual reading.

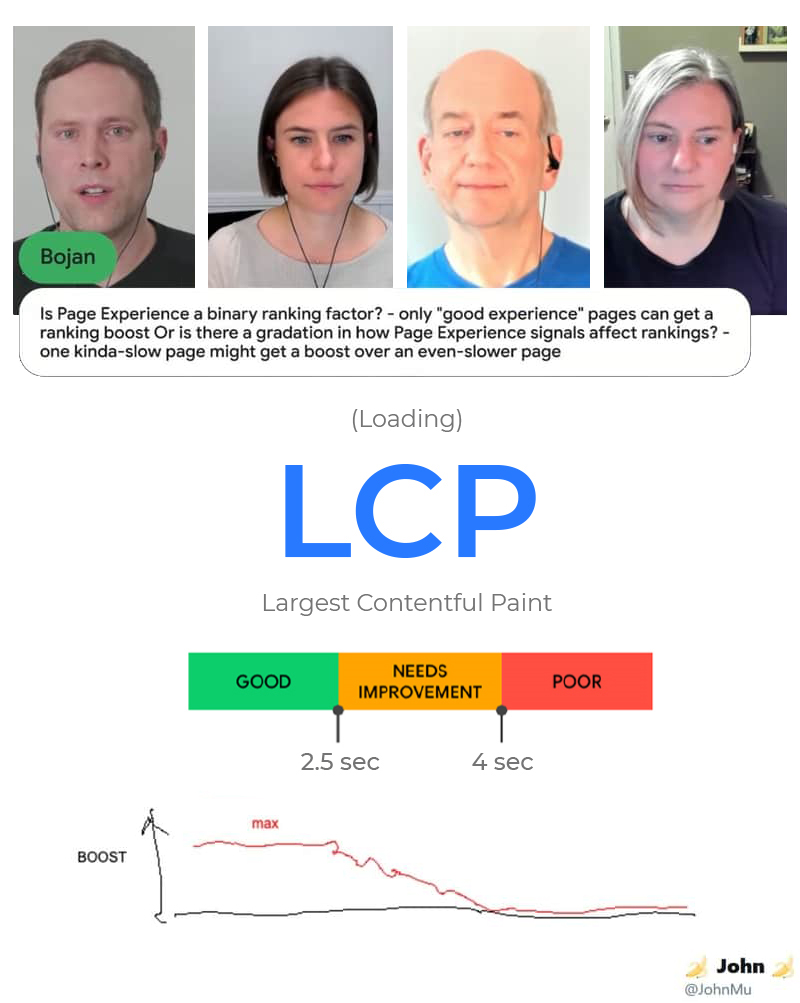

As often happens with matters of SEO, a field that draws in so many people because it sits snugly between technology and marketing, there is a lot of chatter, hype, and misinformation circulating about the upcoming Google search update that will give a ranking boost to faster web pages. Getting a clear understanding and straight answers is an arduous task, so it’s worth taking a step back to glance at the big picture.